Kappa with the Same Two Raters for All Observations

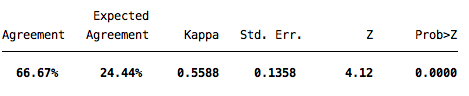

When the same two people rate all of the observations, the command is simply `kap raterA raterB`, where `raterA` and `raterB` are the variables holding each rater's ratings. Click here to download an example file and follow along. The command yields the following output:

The ratings in this example yield a kappa of .56 (rounded up).

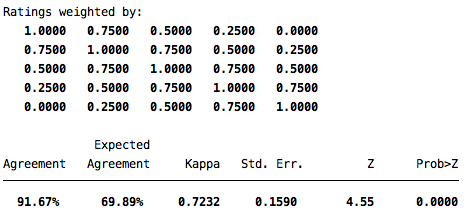
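To show where a number like .56 comes from, here is a minimal Python sketch (not Stata) of the unweighted Cohen's kappa that `kap` reports for two raters: observed agreement minus the agreement expected by chance from each rater's marginal proportions, divided by one minus that chance agreement. The function name `cohen_kappa` and the ten ratings below are hypothetical, made up for illustration; they are not the example file.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters who rated the same items."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must rate the same, non-empty set of items")
    # Observed agreement: share of items where both raters chose the same category
    p_obs = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, multiply the two raters' marginal shares, then sum
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_exp = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_exp == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical ratings on a 1-5 scale (not the tutorial's data)
rater_a = [1, 2, 3, 3, 4, 5, 2, 1, 4, 5]
rater_b = [1, 2, 3, 4, 4, 5, 3, 1, 4, 4]
print(round(cohen_kappa(rater_a, rater_b), 3))  # 0.625
```

Here the raters agree on 7 of 10 items (observed agreement .70), chance agreement from the marginals is .20, so kappa is (.70 - .20) / (1 - .20) = .625.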

These data also offer a nice illustration of a useful option for kappa: weighting. Looking at the five possible ratings, not all disagreements are equal: one rater selecting angry while the other selects depressed is conceptually different from one rater selecting happy while the other selects depressed. Stata provides two types of built-in weighting, both of which tell the program that, for example, one rater selecting 2 and the other selecting 3 is less of a disagreement than one rater selecting 1 and the other selecting 5.

Both are requested with the `wgt()` option. Specifying `wgt(w)` applies linear weights, treating neighboring categories as fairly alike, while `wgt(w2)` applies quadratic weights, treating neighboring categories as very alike. When you run the command, Stata displays a matrix showing how much agreement credit each pair of ratings receives. Below, I ran the command `kap raterA raterB, wgt(w)`, which displays the following output:

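The first section of the output is the weighting matrix, and the second is the weighted kappa. The weighted kappa gives partial credit for near misses: a disagreement of one category no longer counts as 0% agreement (in this matrix, it counts as 75% agreement, because with five categories the linear weight is 1 - 1/(5 - 1) = .75).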
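The Python sketch below (again not Stata) reproduces the two built-in weight schemes and the weighted kappa built on them. The weight formulas follow Stata's documentation for `kap`: `wgt(w)` uses 1 - |i - j|/(k - 1) and `wgt(w2)` uses 1 - ((i - j)/(k - 1))^2, where k is the number of categories. It assumes ratings are coded as consecutive integers 1..k; the function names and ratings are hypothetical, for illustration only.

```python
from collections import Counter


def agreement_weight(i, j, k, kind="w"):
    """Agreement credit for ratings i and j on an ordered 1..k scale."""
    d = abs(i - j) / (k - 1)  # distance between categories, scaled to 0..1
    if kind == "w":
        return 1 - d  # linear, as in wgt(w)
    if kind == "w2":
        return 1 - d ** 2  # quadratic, as in wgt(w2)
    raise ValueError("kind must be 'w' or 'w2'")


def weight_matrix(k, kind="w"):
    """k x k table of agreement weights, meant to mirror the matrix kap prints."""
    return [[agreement_weight(i, j, k, kind) for j in range(1, k + 1)]
            for i in range(1, k + 1)]


def weighted_kappa(rater_a, rater_b, k, kind="w"):
    """Weighted kappa for two raters whose ratings are coded 1..k."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must rate the same, non-empty set of items")
    # Observed agreement: average weight over the rating pairs that actually occurred
    p_obs = sum(agreement_weight(a, b, k, kind)
                for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: weights averaged over all pairings of the two raters' marginals
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_exp = sum(agreement_weight(i, j, k, kind) * count_a[i] * count_b[j]
                for i in count_a for j in count_b) / (n * n)
    if p_exp == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_obs - p_exp) / (1 - p_exp)


print(weight_matrix(5, "w")[0])   # [1.0, 0.75, 0.5, 0.25, 0.0]
print(weight_matrix(5, "w2")[0])  # [1.0, 0.9375, 0.75, 0.4375, 0.0]

# Hypothetical ratings on a 1-5 scale (not the tutorial's data)
rater_a = [1, 2, 3, 3, 4, 5, 2, 1, 4, 5]
rater_b = [1, 2, 3, 4, 4, 5, 3, 1, 4, 4]
print(round(weighted_kappa(rater_a, rater_b, 5, "w"), 3))  # 0.805
```

With five categories, the linear scheme gives a one-category disagreement .75 credit, matching the 75% in the matrix above, while the quadratic scheme gives it .9375, which is why `wgt(w2)` treats neighboring categories as more alike.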