Volume 96, No. 2: June 2017

Study Dashes Bucket of Cold Water on Psychologists

Reed psych major Melissa Lewis ’13 was one of the authors of a groundbreaking study on reproducibility published in the journal Science.

A massive study by 270 researchers, including three Reed psychologists, underscores one of the key challenges facing scientists today: Just how far can you trust scientific research published in professional, peer-reviewed journals?

According to this project, you should take it with a chunk of salt.

The study, published today in Science, set out to examine a core principle of scientific research: the property of reproducibility. Two different researchers should be able to run the same experiment independently and get the same results, whether the field is astrophysics or cell biology. These results form the basis for theories about how the world works, be it the formation of stars or the causes of schizophrenia. Of course, different scientists may offer competing explanations for a particular result—but the result itself is supposed to be reliable.

Unfortunately, it doesn’t always work this way.

In this study, researchers set out to replicate 100 experiments that were published in three prestigious, peer-reviewed psychology journals. Some 97% of the original experiments reported statistically significant results, but only 36% of the replications yielded statistically significant results.

In other words, researchers were unable to replicate the original results in almost two-thirds of the studies they looked at.

“A large portion of replications produced weaker evidence for the original findings despite using materials provided by the original authors, review in advance for methodological fidelity, and high statistical power to detect the original effect sizes,” the authors concluded.

The study is sure to set off alarm bells in psychology labs around the world. “This one will make waves,” says Prof. Michael Pitts [psychology 2011–], who worked with Melissa Lewis ’13 to replicate one of the 100 experiments in Reed's SCALP lab. (The other Reed author is social psychologist Erin Westgate ’10 at the University of Virginia, who replicated another experiment.)

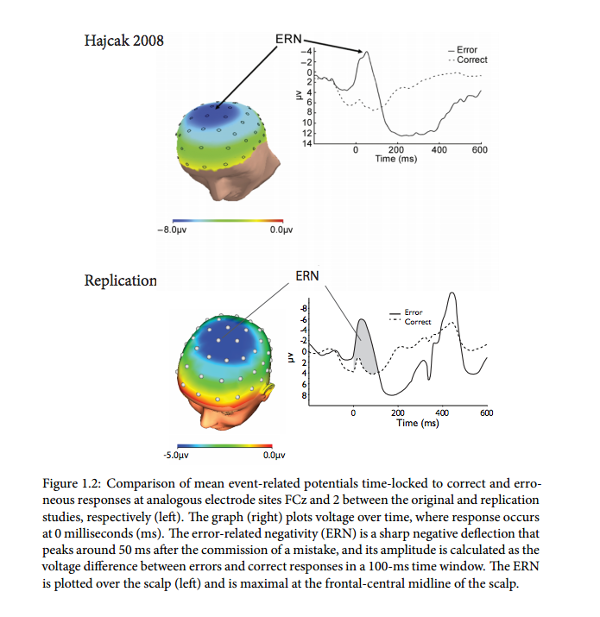

For her senior thesis, Melissa replicated a cognitive neuroscience experiment relating to the “error-related negativity response,” a brainwave that corresponds to the feeling you get when you suddenly realize you’ve locked the keys in the car just as you’re slamming the door shut (colloquially known as the “Oh, shit” response).

The original experiment reported a strong correlation between this response and the so-called “startle response” that occurs when you’re surprised by a stimulus such as a loud noise. Melissa found a similar pattern in her results—but the strength of the correlation was weaker than reported in the original.

“The pattern was close, but not as strong,” she says. “This nicely encapsulates the problem of reproducibility. The reality is that science is noisy and unexpected factors can creep into your results.”

The study in Science identifies several factors that may account for the large number of failed replications. First, journal editors (like most people) are hungry for experiments that are novel and surprising, which puts pressure on researchers to publish results at the ragged edge of statistical significance.

Second, scientists today often stockpile vast quantities of data as a result of their experiments. Given hundreds or thousands of data points, it is often possible to find two variables that seem to be related, such as sunspots and the Dow Jones Industrial Average, when in fact the apparent relationship is essentially a matter of happenstance. “If you run enough t-tests, you’re going to find something significant,” says Prof. Pitts.
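Prof. Pitts's point about t-tests can be checked with a little arithmetic. The sketch below is an illustration, not part of the study. It assumes the conventional significance threshold of 0.05 and tests that are independent of one another, and it assumes no real effect exists in the data. The function name `familywise_error_rate` is made up for this example.

```python
def familywise_error_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Chance that at least one of `num_tests` independent tests comes out
    'significant' by luck alone, when no real effect exists.

    Assumptions (not from the study): independent tests, threshold `alpha`.
    """
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    # Each test avoids a false positive with probability (1 - alpha);
    # all of them avoid it with probability (1 - alpha) ** num_tests.
    return 1.0 - (1.0 - alpha) ** num_tests


if __name__ == "__main__":
    for n in (1, 10, 20, 100):
        rate = familywise_error_rate(n)
        print(f"{n:>3} tests: {rate:.0%} chance of at least one spurious result")
```

Under these assumptions, the chance of a fluke rises from 5% for a single test to roughly 40% for ten tests and above 99% for a hundred, which is why a large stockpile of data almost always yields something that looks significant.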

Although the study focused on psychological research, the authors suspect the phenomenon is widespread. “We investigated the reproducibility rate of psychology not because there is something special about psychology, but because it is our discipline,” they wrote. In fact, the replication project has inspired a similar initiative in the field of cell biology.

The study includes several suggestions for fixing the problem. Researchers should register their experimental protocols in a central database before they run the experiments, in order to discourage cherry-picking the results. Journals should put more emphasis on replication as opposed to seeking novelty above all else. Individual labs should consider delaying publication until they have internally replicated a new result, despite the negative consequences this delay might create for scientists seeking jobs, funding, and promotion. More radical suggestions include completely revamping the current scientific publishing system.

“I feel optimistic about the results,” Melissa says. “This shows the way to make better verification possible and to strengthen the way we do science.”

It is unusual for an undergraduate thesis to be published in Science, but Melissa has a knack for overcoming the odds. After dropping out of high school, she spent several years taking courses at her local community college in Cupertino, California, and worked up from fast food jobs like Little Caesars to clerical jobs as she saved money for college.

She visited Reed as a prospective student, but was crestfallen when she learned the cost of tuition. “I sat in the admission office and said, ‘I don't even know what I'm doing here. I could never afford this.’” She told her interviewer that she'd decided to withdraw her application, but he persuaded her to finish her application and request financial aid. She realized later the interviewer was Paul Marthers [dean of admission 2002–09].

Reed ultimately accepted her application and granted her “extremely generous” financial aid. At Reed, she majored in psychology and volunteered at Outside In. After graduation, she went on to work at the Center for Open Science and is now a data engineer at Simple, an online bank based in Portland.

Tags: psych, undergrad research, financial aid, STEM
